Cohort.AI

A healthcare technology startup

In 2018 I enjoyed a successful engineering contract in the healthcare space. I’ve always found medicine fascinating, and out of curiosity I have long devoured biology and medical journal articles on countless topics. What this client needed was a web-based front end for a service they were building: a well-defined problem I was confident I could solve for them.

The startup was called Cohort.AI (it later became Engooden Health). Cohort’s small tech team was spread across the US, so even though this was pre-pandemic, the primary work mode was remote. One of my teammates, though, was as local as could be: my friend Michael Nossal, who lives close enough to run into on a dog walk (or run!). Sometimes he would bike to a coworking space, but we usually met in his cool little backyard office.

Finding candidates for clinical trials

Mike, an NLP and AI engineer, told me about a compelling new project Cohort was spinning up: automating and streamlining the search for clinical trial candidates. At any given time, countless trials are seeking candidates, who are funneled into a well-defined process built around informed consent. Candidates are selected before they are contacted, and that selection step is what this startup wanted to streamline. Those who respond positively are screened further and may agree to participate in a research study.

Clinical trials are essential to medical research, and they can be very expensive to run, with costs reaching into the billions, so the stakes are high. The candidates are all human (no mice need apply at this phase), but beyond that, the candidate requirements can be quite specific and difficult to fulfill. Some clinical trials fail to move forward for lack of eligible candidates. What a shame, when those candidates are out there but are not being found.

Researchers can hire consultants to wade through medical histories to source candidates for these trials, but these highly trained consultants are not cheap, and their time is limited. The candidates, meanwhile, are often people with medical conditions who would be grateful for the chance to participate in something that could contribute to a cure for themselves or others.

Do you have a doctor’s note?

The consultants used tools to search electronic health records, but they were systematically missing many candidates whose medical information was not stored in a searchable form. The health records contained doctor’s notes, test results and other unstructured text holding a wealth of information that was not otherwise coded. Thankfully these notes were already in text form, not the jumble of indecipherable hand-scrawled squiggles some doctors are known for. But the text was free-form English, following no particular syntax or format.

Extracting useful information from these notes would therefore require natural language processing. Mike was working on that part of the problem, drawing on various lexicons of medical terminology covering diseases, pharmaceuticals and more.

Wanted: A web front end

At this time, the company was already achieving success with an AI-based approach to chronic care management. They wanted to launch an additional line of business for clinical trials, but everything they had implemented thus far was on the back end. They needed a GUI to manually prototype, test and build out their own service. This would improve their own team’s productivity on day one by relieving the sole engineer who knew how to hand-author queries. They also needed a vehicle to showcase their technology to prospective customers, partners and investors.

Cohort had previously hired a designer who provided a Sketch prototype of an interactive, complex query builder and results explorer with list and detail views. It needed some fleshing out, but it was a very solid start.

The Cohort engineering team was strong, but they were not front-end engineers. Patrick Austermann, my manager, knew enough about front-end work to make sound engineering judgments (including hiring me!), but nobody in the company had the bandwidth or inclination to jump in and build a front end themselves.

As with Tousled, I didn’t get this job through a recruiter or contract agency. I was essentially the account manager for my own contract as well as the lead/senior/what-have-you front-end engineer. The team clearly expressed their needs, and I was confident I could meet them all with excellence. I prepared a bid, wrote a proposal describing what I would do, and negotiated it, just as I had done on behalf of FILTER at Microsoft. The prospect of contributing to public health in some way was very attractive to me.

A Node.js server + a front end with React + TypeScript + Material UI

I recommended building the web site with React and TypeScript. After taking a fresh survey of the tools landscape, I suggested the Material UI library, based on Google’s Material Design, which seemed well matched to what Cohort’s designer had come up with. For state management I chose two well-known favorites, Redux and redux-saga, and applied my own best practices. I got started on the front end while Mike built out the API to their service.

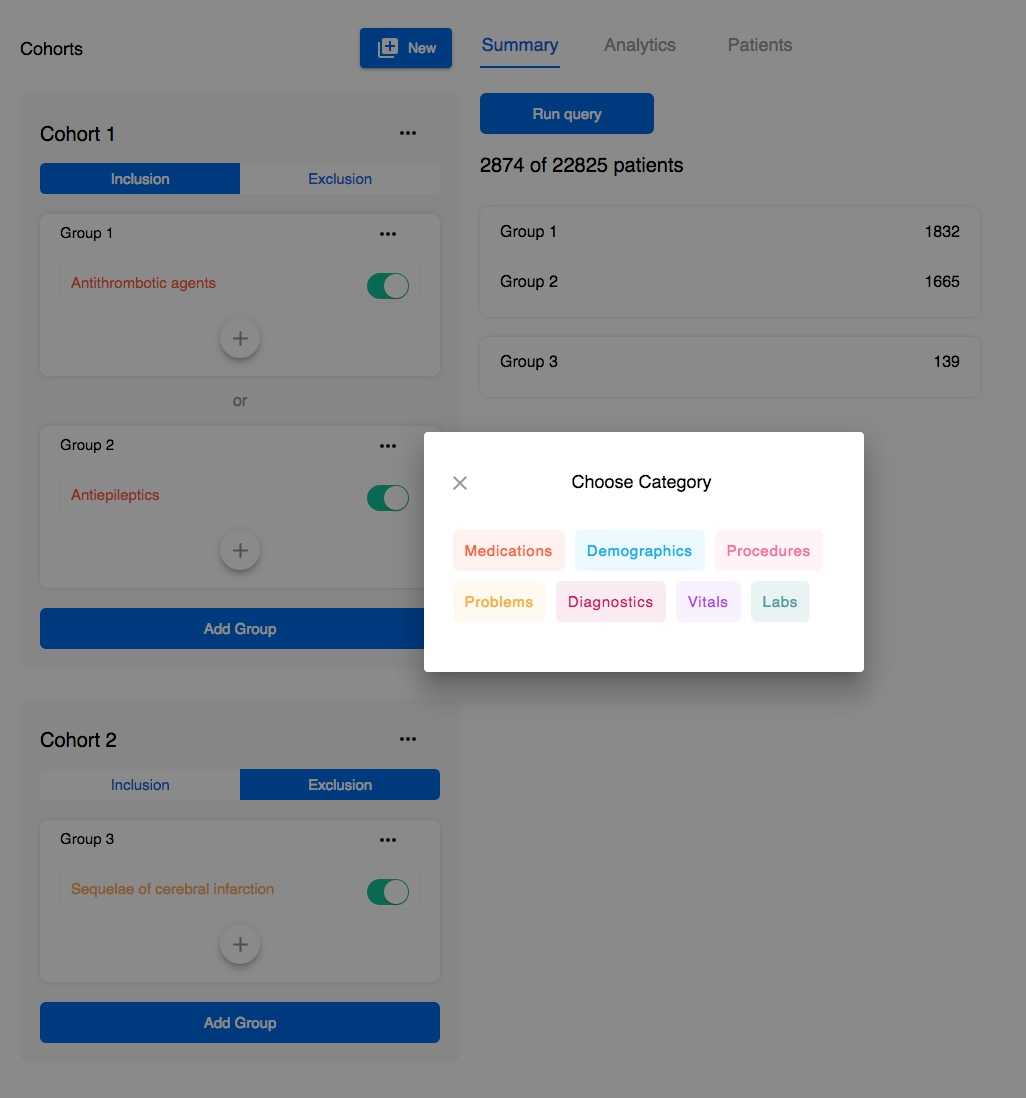

The core UI flow started with a query builder. You could construct simple or complex queries to find potential clinical trial participants using inclusion and exclusion criteria. You could run these queries to see the list of results, and then explore and drill into those results in various ways through typical list and detail views. You could then iteratively refine the queries to reach the result size and quality you were seeking. This produced a candidate list that fed into the next phase of the process.
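To make the inclusion/exclusion idea concrete, here is a minimal sketch of how such a query might be represented and evaluated. Everything in it is hypothetical: the type names (`Criterion`, `CohortQuery`, `Patient`), the assumption that a patient's conditions and medications are already coded as lowercase lexicon terms, and the simple rule that all inclusion criteria must match while any matching exclusion criterion disqualifies. It is not Cohort's actual data model or API, and the real back end did this work server-side against NLP-derived data.

```typescript
// Hypothetical data model for illustration only; not Cohort's actual API.
type ConceptKind = "condition" | "medication";

interface Criterion {
  kind: ConceptKind;
  term: string; // a term from a medical lexicon, e.g. "type 2 diabetes"
}

interface CohortQuery {
  include: Criterion[]; // every inclusion criterion must match
  exclude: Criterion[]; // any matching exclusion criterion disqualifies
}

interface Patient {
  id: string;
  conditions: Set<string>;  // assumed already normalized to lowercase
  medications: Set<string>; // assumed already normalized to lowercase
}

function hasConcept(patient: Patient, criterion: Criterion): boolean {
  const pool = criterion.kind === "condition" ? patient.conditions : patient.medications;
  return pool.has(criterion.term.toLowerCase());
}

function matchesQuery(patient: Patient, query: CohortQuery): boolean {
  return (
    query.include.every((c) => hasConcept(patient, c)) &&
    !query.exclude.some((c) => hasConcept(patient, c))
  );
}

// Tiny made-up population for the usage example.
const patients: Patient[] = [
  { id: "p1", conditions: new Set(["type 2 diabetes"]), medications: new Set(["metformin"]) },
  { id: "p2", conditions: new Set(["type 2 diabetes"]), medications: new Set(["insulin"]) },
  { id: "p3", conditions: new Set(["hypertension"]), medications: new Set<string>() },
];

// Patients with type 2 diabetes who are not taking insulin.
const query: CohortQuery = {
  include: [{ kind: "condition", term: "Type 2 Diabetes" }],
  exclude: [{ kind: "medication", term: "insulin" }],
};

const matched = patients.filter((p) => matchesQuery(p, query));
console.log(`${matched.length} match: ${matched.map((p) => p.id).join(", ")}`); // 1 match: p1
```

Keeping the query as plain, serializable data is one reasonable design choice here: the same object can live in application state, be sent to a back end, and be tweaked criterion by criterion while the result count updates.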

There were other features around this core flow, but that’s the essence of the tool. With it, you could see in real time how your query criteria affected the number of patients in the resulting population.

The queries themselves involved medical terminology like diseases and pharmaceuticals, which are often long and challenging to spell correctly. This is a great context for autocompletion, which I’d implemented many times before.
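The article doesn't say how the autocompletion was implemented, so the following is only one common technique, offered as a sketch: keep the lexicon sorted, binary-search for the first term at or after the typed prefix, then read forward while terms still share that prefix. The class name `PrefixCompleter` and the sample drug names are illustrative assumptions; a trie or a server-side index would be equally valid alternatives.

```typescript
// A minimal prefix autocompleter over a sorted lexicon (illustrative, not the original code).
class PrefixCompleter {
  private readonly terms: string[];

  constructor(lexicon: string[]) {
    // Deduplicate and lowercase, then sort so terms sharing a prefix are contiguous.
    this.terms = Array.from(new Set(lexicon.map((t) => t.toLowerCase()))).sort();
  }

  // Index of the first term >= prefix (standard lower-bound binary search).
  private lowerBound(prefix: string): number {
    let lo = 0;
    let hi = this.terms.length;
    while (lo < hi) {
      const mid = (lo + hi) >>> 1;
      if (this.terms[mid] < prefix) lo = mid + 1;
      else hi = mid;
    }
    return lo;
  }

  // Return up to `limit` lexicon terms that start with the user's input.
  complete(input: string, limit = 5): string[] {
    const prefix = input.trim().toLowerCase();
    if (prefix.length === 0) return [];
    const out: string[] = [];
    for (let i = this.lowerBound(prefix); i < this.terms.length && out.length < limit; i++) {
      if (!this.terms[i].startsWith(prefix)) break; // past the matching run
      out.push(this.terms[i]);
    }
    return out;
  }
}

// A tiny sample lexicon; a real one would hold many thousands of terms.
const completer = new PrefixCompleter([
  "Metformin", "Methotrexate", "Metoprolol", "Lisinopril", "Atorvastatin", "Methadone",
]);
console.log(completer.complete("Meth").join(", ")); // methadone, methotrexate
```

Each keystroke costs a logarithmic search plus the handful of results returned, which keeps suggestions responsive even for long medical lexicons.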

Mike and I agreed on the details of the query API that my code would use to talk to his NLP-informed back end and worked up some tests. I put together a lightweight Node.js Express application server on a Linux instance to support front-end features like autocompletion, proxy the queries, and cache some results.
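As a rough illustration of the "proxy and cache" role, here is a sketch of just the caching layer such a server might call from its route handlers; the Express wiring is deliberately left out. The `TtlCache` class, the time-to-live policy, and the use of a JSON-serialized query as the cache key are all assumptions made for the example, not a description of the original server.

```typescript
// Hypothetical names; a sketch of the caching idea only, not the original server code.
type Fetcher<T> = (key: string) => Promise<T>;

interface Entry<T> {
  value: Promise<T>;
  expiresAt: number;
}

class TtlCache<T> {
  private readonly entries = new Map<string, Entry<T>>();
  private readonly fetcher: Fetcher<T>;
  private readonly ttlMs: number;
  private readonly now: () => number;

  constructor(fetcher: Fetcher<T>, ttlMs: number, now: () => number = Date.now) {
    this.fetcher = fetcher;
    this.ttlMs = ttlMs;
    this.now = now; // injectable clock, handy for testing expiry
  }

  get(key: string): Promise<T> {
    const hit = this.entries.get(key);
    if (hit && hit.expiresAt > this.now()) return hit.value;

    // Cache the pending promise so concurrent identical requests share one back-end call.
    const value = this.fetcher(key);
    this.entries.set(key, { value, expiresAt: this.now() + this.ttlMs });
    // Drop failed lookups so the next request retries instead of replaying the error.
    value.catch(() => {
      if (this.entries.get(key)?.value === value) this.entries.delete(key);
    });
    return value;
  }
}

let backendCalls = 0;
const cache = new TtlCache<string>(async (k) => {
  backendCalls++; // stand-in for forwarding the query to the NLP back end
  return `results for ${k}`;
}, 60000);

// Assumes the client serializes queries consistently, so identical queries share a key.
const cacheKey = JSON.stringify({ include: ["asthma"], exclude: [] });
void cache.get(cacheKey);
void cache.get(cacheKey);
console.log(`back-end calls: ${backendCalls}`); // back-end calls: 1
```

Caching the promise rather than the resolved value is a small but useful choice: two users issuing the same query at nearly the same moment trigger only one expensive back-end request.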

Success

This role wasn’t intended to be a long-term engagement. I made sure every requirement was met, and left them with efficient, reliable, well-organized, well-documented code. The front end I built closely resembled their original vision, brought to life. I cached as much as I could on the client side to make the experience zippy, as if it were running locally.

I am proud of the fact that what I built continued to meet their needs for quite some time with no additional help from me. They were skilled engineers, but not web developers per se, so I parameterized a lot of the UI in such a way that they could iterate and refine the querying behavior and other features just by modifying a bunch of static data in a separate file. That worked out very well.
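Here is a hedged sketch of what "parameterizing the UI with static data" can look like. The field names, options, and the `fieldsFor` helper are invented for illustration; the original configuration file isn't shown in this article. The point is that UI code reads a plain array rather than hard-coding inputs, so an engineer who isn't a web developer can add, hide, or restrict query fields by editing data alone.

```typescript
// Hypothetical static configuration; field names and options are illustrative only.
interface FieldConfig {
  id: string;
  label: string;
  kind: "condition" | "medication" | "lab" | "demographic";
  autocomplete: boolean; // offer lexicon suggestions as the user types
  allowExclude: boolean; // may be used as an exclusion criterion
  enabled: boolean;      // hide a field without touching component code
}

const QUERY_FIELDS: readonly FieldConfig[] = [
  { id: "dx", label: "Diagnosis", kind: "condition", autocomplete: true, allowExclude: true, enabled: true },
  { id: "rx", label: "Medication", kind: "medication", autocomplete: true, allowExclude: true, enabled: true },
  { id: "age", label: "Age range", kind: "demographic", autocomplete: false, allowExclude: false, enabled: true },
  { id: "a1c", label: "HbA1c result", kind: "lab", autocomplete: false, allowExclude: false, enabled: false },
];

// Components ask the config which inputs to render, so editing the array above
// changes what the query builder offers.
function fieldsFor(mode: "include" | "exclude"): FieldConfig[] {
  return QUERY_FIELDS.filter((f) => f.enabled && (mode === "include" || f.allowExclude));
}

console.log(fieldsFor("include").map((f) => f.label).join(", ")); // Diagnosis, Medication, Age range
console.log(fieldsFor("exclude").map((f) => f.label).join(", ")); // Diagnosis, Medication
```

Flipping `enabled` on the HbA1c entry, for example, would surface a new field in the builder with no component changes.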

The company went on to achieve success with its chronic care management business. It never went big with the idea of using NLP to derive value from doctors’ notes, but the idea was an excellent one. Amazon certainly thinks so: Amazon Comprehend Medical, which extracts medical information from unstructured clinical text, is now part of its vast AWS portfolio.